* This post was authored by Nancy Pontika, Lucas Anastasiou and Petr Knoth.

The CORE team is thrilled to announce the release of a new version of our recommender; a plugin that can be installed in repositories and journal systems to suggest similar articles. This is a great opportunity to improve the functionality of repositories by unleashing the power of recommendation over a huge collection of open-access documents, currently 37 million metadata records and more than 4 million full-text, available in CORE.

Highlights

Recommender systems and the CORE Plug-In

Typically, a recommender tracks a user’s preferences when browsing a website and then filters the user’s choices suggesting similar or related items. For example, if I am looking for computer components at Amazon, then the service might send me emails suggesting various computer components. Amazon is one of the pioneers of recommenders in the industry being one of the first adopters of item-item collaborative filtering (a method firstly introduced in 2001 by Sarwar et al. in a highly influential scientific paper of modern computer science).

Over the years, many recommendation methods and their variations have been suggested, evaluated both by academia and industry. From a user’s perspective, recommenders are either personalised, recommendations targeted to a particular user, based on the knowledge of the user’s preferences or past activity, or non-personalised, recommending the same items to every user.

From a technological perspective, there are two important classes of recommender systems: collaborative filtering and content based filtering.

1. Collaborative filtering (CF):

Techniques in this category try to match a user’s expected behaviour over an item according to what other users have done in the past. It starts by analysing a large amount of user interactions, ratings, visits and other sources of behaviour and then builds a model according to these. It then predicts a user’s behaviour according to what other similar users – neighbour users – have done in the past – user-based collaborative filtering.

The basic assumption of CF is that a user might like an unseen item, if it is liked by other users similar to him/her. In a production system, the recommender output can then be described as, for example, ‘people similar to you also liked these items.’

These techniques are now widely used and have proven extremely effective exploratory browsing and hence boost sales. However, in order to work effectively, they need to build a sufficiently fine-grained model providing specific recommendations and, thus, they require a large amount of user-generated data. One of the consequences of insufficient amount of data is that CF cannot recommend items that no user has acted upon yet, the so called cold-items. Therefore, the strategy of many recommender systems is to expose these items to users in some way, for example either by blending them discretely to a home page, or by applying content-based filtering on them decreasing in such way the number of cold-items in the database.

While CF can achieve state-of-the-art quality recommendations, it requires some sort of a user profile to produce recommendations. It is therefore more challenging to apply it on websites that do not require a user sign-on, such as CORE.

2. Content-based filtering (CBF)

CBF attempts to find related items based on attributes (features) of each item. These attributes could be, for example the item’s name, description, dimensions, price, location, and so on.

For example, if you are looking in an online store for a TV, the store can recommend other TVs that are close to the price, screen size, and could also be similar – or the same – brand, that you are looking for, be high-definition, etc. The advantage of content-based recommendations is that they do not suffer from the cold-start problem described above. The advantage of content-based filtering is that it can be easily used for both personalised and non-personalised recommendations.

The CORE recommendation system

There is a plethora of recommenders out there serving a broad range of purposes. At CORE, a service that provides access to millions of research articles, we need to support users in finding articles relevant to what they read. As a result, we have developed the CORE Recommender. This recommender is deployed within the CORE system to suggest relevant documents to the ones currently visited.

In addition, we also have a recommender plugin that can be installed and integrated into a repository system, for example, EPrints. When a repository user views an article page within the repository, the plugin sends to CORE information about the visited item. This can include the item’s identifier and, when possible, its metadata. CORE then replies back to the repository system and embeds a list of suggested articles for reading. These actions are generated by the CORE recommendation algorithm.

How does the CORE recommender algorithm work?

Based on the fact that the CORE corpus is a large database of documents that mainly have text, we apply content-based filtering to produce the list of suggested items. In order to discover semantic relatedness between the articles in our collection, we represent this content in a vector space representation, i.e. we transform the content to a set of term vectors and we find similar documents by finding similar vectors.

The CORE Recommender is deployed in various locations, such as on the CORE Portal and in various institutional repositories and journals. From these places, the recommender algorithm receives information as input, such as the identifier, title, authors, abstract, year, source url, etc. In addition, we try to enrich these attributes with additional available data, such as citation counts, number of downloads, whether the full-text available is available in CORE, and more related information. All these form the set of features that are used to find the closest document in the CORE corpus.

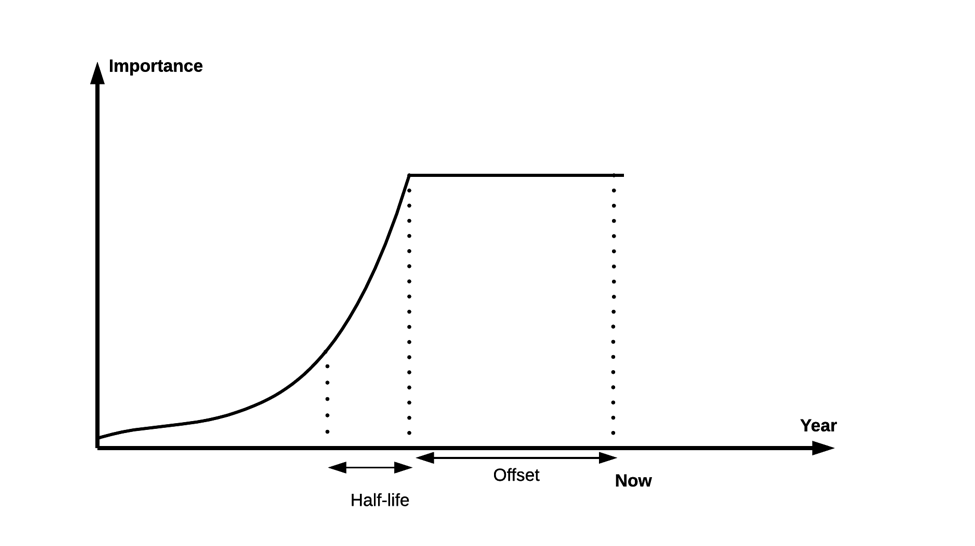

Of course not every attribute has the same importance as others. In our internal ranking algorithm we boost positively or negatively some attributes, which means that we weigh more or less some fields to achieve better recommendations. In the case of the year attribute, we go even further, and apply a decay function over it, i.e. recent articles or articles published a couple of years ago get the same boosting (offset), while we reduce the importance of older articles by 50% every N years (half-life). In this way recent articles retain their importance, while older articles contribute less to the recommendation results.

Someone may ask: how do you know which weight to put in each field you are using? How did you come up with the parameters used in the decay function? Choosing wisely these parameters is crucial for the performance of the recommendations. To tackle this issue we created an offline evaluation framework, a ground-truth, to help us make an educated choice on these parameters and tune our recommender. For this we analysed the Microsoft Academic Graph dataset, extracted its citation graph – a huge list containing relationships between cited articles – and we proceeded with the assumption that “if an article X cites article Y, then article X and article Y are similar”. We benchmarked our recommender for various sets of parameters until we reached an optimal combination that provides the most similar recommendations with respect to this assumption. To complicate things a bit further, we also experimented with another ground-truth using co-citations, i.e. the assumption that two articles are more likely to be related if they tend to be co-cited in academic documents. This assumption is further extended by taking into account the positionality/proximity of the citations within the document.

Furthermore, the CORE recommender employs several filters to improve the quality of the recommendations. These filters are: we don’t provide recommendations on items that have been taken down from our collection; we can recommend only papers with an open access full-text; only articles with at least a minimal set of metadata attributes; only articles with a generated thumbnail; and more options. In the case where the CORE recommender has been deployed in a repository platform, we can filter and recommend only articles harvested by that specific repository.

We expect, however, that in some cases the recommender might provide irrelevant or even erroneous recommendations. In this case, we offer our users the feature to report this using a feedback button as shown below. These items are then blacklisted and won’t be displayed again in the recommendation list.

In any sort of a recommender system, errors are unavoidable. In CORE, apart from introducing the feedback button, we are planning to implement “explanations” as a means to develop trust across our users. Explanations are a short text description that offers an insight of how the recommender could be justified to the user. You may have already met those in e-commerce websites where they look like:

- People who viewed [this item] also viewed;

- Customers who bought [this item] also bought;

- What do customers buy after viewing this item;

In the research publication domain these explanations could be in the form:

- The recommended article addresses the same topic (possibly even highlighting the most prominent matching terms);

- People reading this article were also interested in the recommended article;

- This recommended article is related to the reference article and comes from the same repository (when we employ the same repository filter);

- The recommended article shares a common author with the reference article;

- The recommended article provides and influential citation to the reference article;

- The recommended article is often co-cited with this article.

It is worth mentioning that the above description of how the CORE recommender works, reflects only the current state. Our plan is to constantly enhance this initial release. For instance, we will re-evaluate it and refine it, by including more signals and other sources, such as readership, citation count, popularity and research area topics, to maximise successful recommendations.

Offline evaluation in recommender systems is often not sufficiently predictive of the online experience. That means that a good offline evaluation of an algorithm instance, cannot guarantee good performance of the same algorithm when deployed in a real production system. As our feature set is expected to grow substantially in the future (the list of fields that we compare publications with) we need to carry on with the testing and fine-tuning of the performance on a continuous basis. To address this problem, we measure the activity using Click Through Rate (CTR), which is a well-established on-line evaluation metric used widely by recommender systems.

In the future, we want to transform our recommender from a pure content-based recommendation engine to a hybrid solution that can deliver personalised recommendations using collaborative filtering techniques, and capturing past user behaviour within user profiles. In order to do that, we would ideally like to be able to encourage a critical mass of people to sign on. Additionally, we are thinking about going beyond recommending articles to recommending other research entities. Example cases could be:

- discovering research funding opportunities,

- discovering potential collaborators/experts

- auto-suggesting citations to back-up claims in real time, while writing a scientific paper,

- detecting plagiarism,

- recommending research methods.

An additional step further would be to connect existing knowledge expressed in research papers, introducing new opportunities for the discovery of hidden links and relationships. This is part of the broader challenge of literature based discovery.

Conclusions

Recommender systems are widely used and have become a ubiquitous practice across a broad range of tasks. They do not only help us shift through the massive amounts of information we are surrounded by in our everyday lives, but more importantly they can help us discover useful information that we are not aware of.

Despite various technical challenges, recommenders in research have a great potential. They help users find, explore and discover research by the moment it becomes available. There haven’t been many recommenders deployed in the academic domain yet, but it is certainly a growing field. The CORE Recommender is unique in a number of aspects. Firstly, our methods rely on the availability of full-texts and are not based on abstracts or metadata only. Second, we ensure that the recommended articles are available open access, making the CORE Recommender a great choice not only for open repositories, but also for the general public. We provide our recommendation service for free, as we are extensively relying on open data, also providing it using a machine accessible interface (API).

We believe that the CORE Recommender is an example of the added value that can be delivered over the data supplied by open access journals and repositories and CORE is happy to have created this network, where information can be fed back to the community for the benefit of all.

Note: The recommender is currently accessible to a focus group and will soon be available to everyone. If you would like to try it, do get in touch theteam[at]open[dot]ac[dot]uk.

Update 27/03/2017: The recommender is now open to everyone. If you would like to try it, do get in touch theteam[at]open[dot]ac[dot]uk.

Update 23/11/2016: George Macgregor, Institutional Repository Coordinator at the University of Strathclyde wrote a blog post regarding the implementation of the CORE Recommender in Strathprints – “Strathprints now supporting the CORE Recommender“.

Update 24//11/2016: Alice Fodor, Research Publications Officer at London School of Hygiene and Tropical Medicine wrote a blog post regarding the implementation of the CORE recommender in LSHTM Research Online – “Finding similar articles via LSHTM Research Online“.