Posted 1st April 2021. You’ll probably be glad to know that, thankfully, none of what follows is actually true. We do hope, however, that it brought even a weak smile to your REF-weary faces. We, like you, are at the behest of far greater and more powerful forces so, if and when REF2026 comes around, we’ll be hanging on for the ride too! Happy holidays to those lucky enough to get some time off over the next couple of weeks.

After REF2021, I think we all deserve a break.

The CORE team

As the REF2021 submission is now closing, it is time for us to offer you a sneak peek at the future. While supporting repository managers and helping them find their way through the submission process with the CORE Repository Dashboard and the Repository Edition, we also started working with Research England on what we learned from the experience and how we can improve the whole REF process.

There are a few lessons learned that are emerging from the current system:

- It’s expensive.

- It is domain-based and requires many domain experts to provide their feedback.

- It brings additional pressure on the research support team.

- It focuses only on a certain variable but not on the overall quality of the submission.

With all of these in mind, we had a series of fruitful meetings with all the interested stakeholders to design a measure that would improve the process.

We are now happy to announce that we contributed to two new research assessment metrics that will be used by REF2026 and that REF2026 will now be fully automated, removing the now obsolete peer-review process.

Specifically, these measures are:

- alphaRank

- PI index

While these might not be the only measures considered, we’ve already had confirmation that they will be the fulcrum of the new evaluation system.

Dr Petr Knoth, Head & Founder of CORE, says: “These new metrics will not only significantly reduce the cost of REF2026, but they will also make future iterations of REF more stable. The market for researchers will also be made more transparent, as top UK HEIs will undoubtedly be looking for researchers to maximise their alphaRank and PI Index.”

Highlights

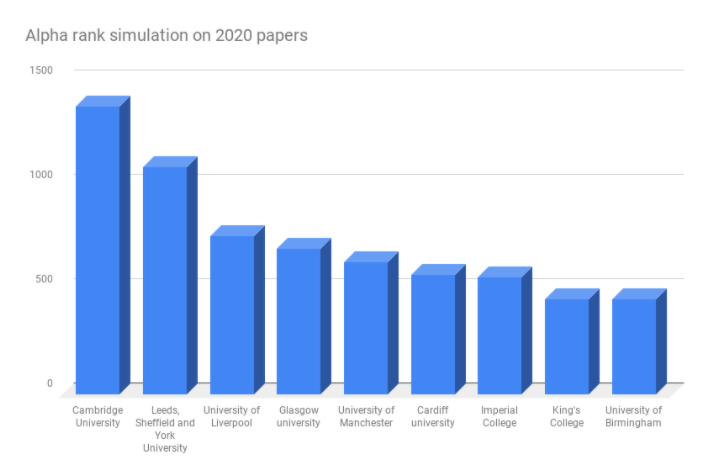

alphaRank – Rethinking submission scoring

The first measure we introduce takes a holistic approach to each HEI’s submissions. Its biggest advantage is a fair and balanced ranking system that is fully automated.

The alphaRank ranking for each submission is calculated as follows:

author_score(author.surname) = author.surname[0].asNumber()*26 + author.surname[1].asNumber()

paper_score = Math.sum(author_score(paper.authors[]))

institutional_score = Math.sum(paper_score(institution.papers[]))

final_ranking = institutional_score.sort(order="desc")

Our analysis reveals that there are many advantages to this metric:

- It is cheap to produce and can be fully automated.

- It favours good alphanumeric naming of records, making life easier for librarians.

- It puts researchers at the centre of the submission.

- The problems with author positioning are immediately resolved. Everyone is treated equally unequally.

- It will have a positive impact on researchers’ mental health as it encourages them to reconcile with reality rather than to constantly fight for recognition.

- It encourages and helps build a healthy jobs market as HEIs will need to have a fair share of surnames to ensure their evaluation success.

- It might encourage the creation of unexpected new research collaborations.

To give an example, Prof Mickey Mouse would understandably be ranked higher than Dr Donald Duck, providing a certain layer of determinism and removing the need for a subjective and highly controversial peer-review process. Having said that, Zdenek Zdrahal would always be ranked the highest.
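For readers who would like to check their own standing ahead of REF2026, the alphaRank formula above can be sketched in Python. This is only a rough illustration under a few assumptions of ours: `asNumber()` is taken to map A to 1 through Z to 26 regardless of case, surnames are assumed to start with two plain ASCII letters, and the institution names in the usage example are hypothetical.

```python
def letter_number(ch: str) -> int:
    """Map one ASCII letter to its alphabet position (assumed: A=1 ... Z=26)."""
    if len(ch) != 1 or not ch.isascii() or not ch.isalpha():
        raise ValueError(f"unsupported character: {ch!r}")
    return ord(ch.upper()) - ord("A") + 1


def author_score(surname: str) -> int:
    """Score a surname from its first two letters, as in the formula."""
    if len(surname) < 2:
        raise ValueError("surname needs at least two letters")
    return letter_number(surname[0]) * 26 + letter_number(surname[1])


def paper_score(author_surnames: list[str]) -> int:
    """Sum the author scores for one paper."""
    return sum(author_score(surname) for surname in author_surnames)


def institutional_score(papers: list[list[str]]) -> int:
    """Sum the paper scores for one institution."""
    return sum(paper_score(authors) for authors in papers)


def final_ranking(institutions: dict[str, list[list[str]]]) -> list[tuple[str, int]]:
    """Rank institutions by institutional score, highest first."""
    scores = {
        name: institutional_score(papers)
        for name, papers in institutions.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical institutions, each a list of papers given as surname lists.
    submissions = {
        "Hypothetical A": [["Mouse", "Duck"]],  # 353 + 125 = 478
        "Hypothetical B": [["Zdrahal"]],        # 680
    }
    print(final_ranking(submissions))
```

Under these assumptions, Mouse scores 353 and Duck scores 125, which matches the ordering described above, while Zdrahal scores 680.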

Initial results reveal that the University of Cambridge will stay at the top, while its rival Oxford University might be the biggest loser.

PI Index – Bringing Internet of Things into the REF

The Internet of Things is now pervasive in many areas of our lives, but it is only timidly approaching the world of bibliometrics. We are now proud to reveal another index that will be used to rank paper submissions in REF 2026. Even though we are still in the experimental stages, we predict that our new PI* Index will be the staple of future REF submissions and will be adopted in overseas Performance-Related Funding Systems.

In our drive for full automation, we worked together with major sensor companies to deliver a device capable of measuring the PI Index autonomously. The values of the PI Index will be available immediately on the CORE Dashboard, on the REF 2026 control panel and on all the smart devices in your home.

The PI Index is calculated using a small sensor that connects directly to your colleagues involved in the submission process. By using state-of-the-art sensors, we can easily recognise the effort made in the submission and finally consider it in the REF submissions’ scores. The solution will be trialled on REF 2026 volunteers and made a requirement for the following submission cycle.

As readers can see for themselves, there are clear advantages in adopting this approach:

- It offers a real-time estimation of the effort put into the submission.

- It makes the amount of contribution of individual authors measurable, removing any ambiguity about who contributed the most.

- It promotes healthy living and is green.

- It can be easily extended with Blockchain functionalities to ensure the security of the connection.

- It’s available everywhere.

- With built-in GPS functionality, it allows employers to know immediately where researchers are at all times, allowing the maximisation of PI Index production throughout the day.

We have also been contacted by a number of prominent publishers, including Elsevier, Sprunger and Nurture, and Tailors & Frencis, who have expressed interest in this technology for the purposes of simplifying the peer-review process. More specifically, any work that does not meet the required effort threshold would be desk rejected or receive major revisions until the required level of effort was reached.

In our future work, we would also like to take the name of the submission file into account in the ranking, such that “<z-surname-author>_finalfinalfinal_draft.docx” would be a strong sign of a successful submission. This will promote healthy versioning practices among researchers throughout the submission process.

*Perspiration Intensity