Last Tuesday, March 3, we were privileged at CORE to welcome a leading figure in the quest for Open Access to scientific knowledge.

Last Tuesday, March 3, we were privileged at CORE to welcome a leading figure in the quest for Open Access to scientific knowledge.

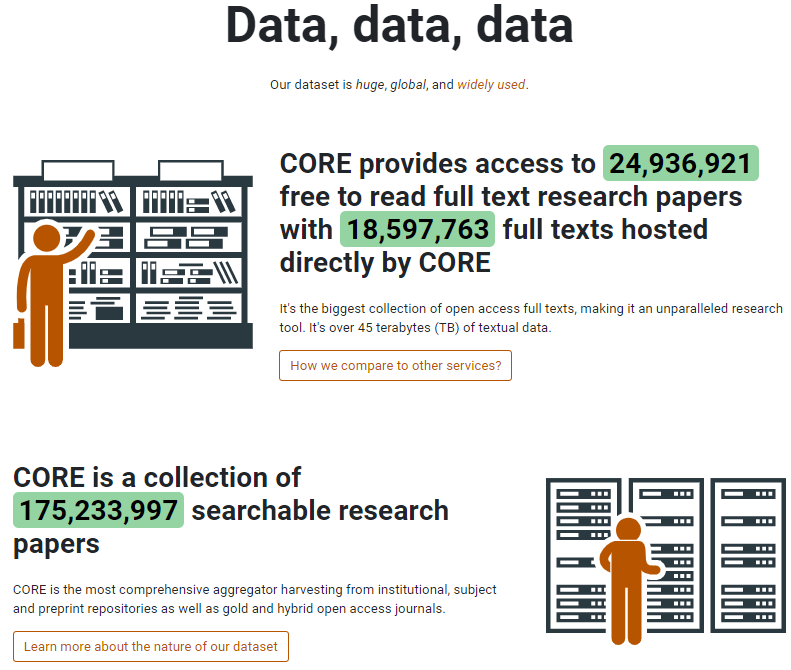

Professor Carl Malamud is a highly-regarded American technologist, author, and public domain advocate, known for his foundation Public.Resource.Org. He is on a crusade to liberate information locked up behind paywalls — and his campaigns have scored many victories. His mission is quite similar to CORE’s one, as we also work on aggregating all open access research outputs from repositories and journals worldwide and make them available to the public without hitting the paywall. Carl has spent decades publishing copyrighted legal documents, from building codes to court records, and then arguing that such texts represent public-domain law that ought to be available to any citizen online. read more...