* Post updated on June 20th and June 23rd with links to presentations.

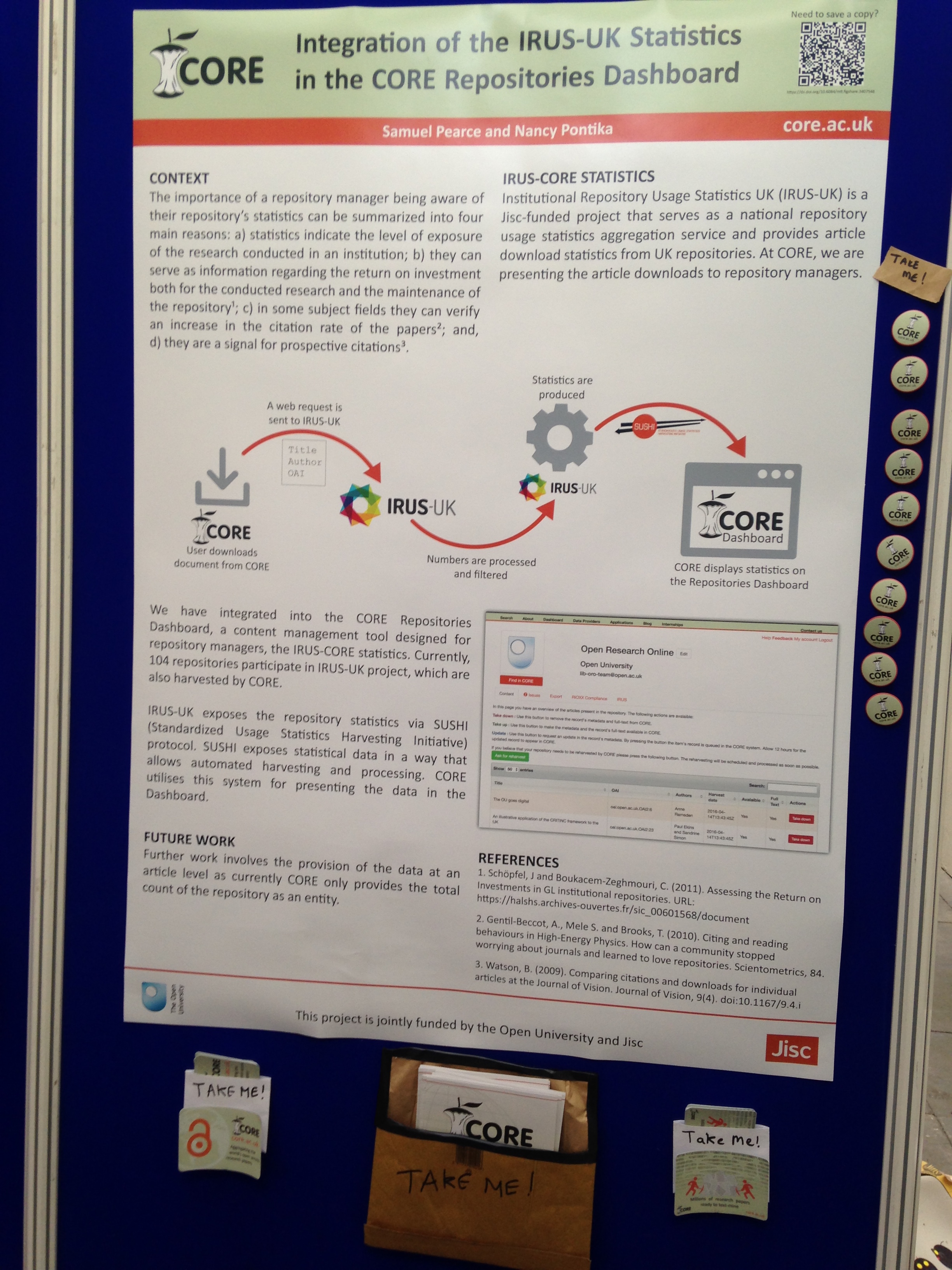

At this year’s Open Repositories 2016, an international conference for the scholarly communications community with a focus on repositories, open access, open data and open science, CORE had six items accepted: one paper, one workshop, one Repository Rave presentation, one poster and two showcases in the Developer Track and Ideas Challenge. The titles and summaries of our accepted proposals are:

Paper: Exploring Semantometrics: full text-based research evaluation for open repositories / Knoth, Petr; Herrmannova, Drahomira

In recent years, there has been growing interest in developing new scientometric measures that go beyond traditional citation-based bibliometric measures. This interest is motivated on the one hand by the wider availability, or even emergence, of new information evidencing research performance, such as article downloads, views and Twitter mentions, and on the other hand by the continued frustrations and problems surrounding the application of citation-based metrics to evaluate research performance in practice. Semantometrics are a new class of research evaluation metrics that build on the premise that full text is needed to assess the value of a publication. This talk will present the results of an investigation into the properties of the semantometric contribution measure (Knoth & Herrmannova, 2014). We will provide a comparative evaluation of the contribution measure against traditional bibliometric measures based on citation counting. Our analysis also focuses on the potential application of semantometric measures in large databases of research papers.
